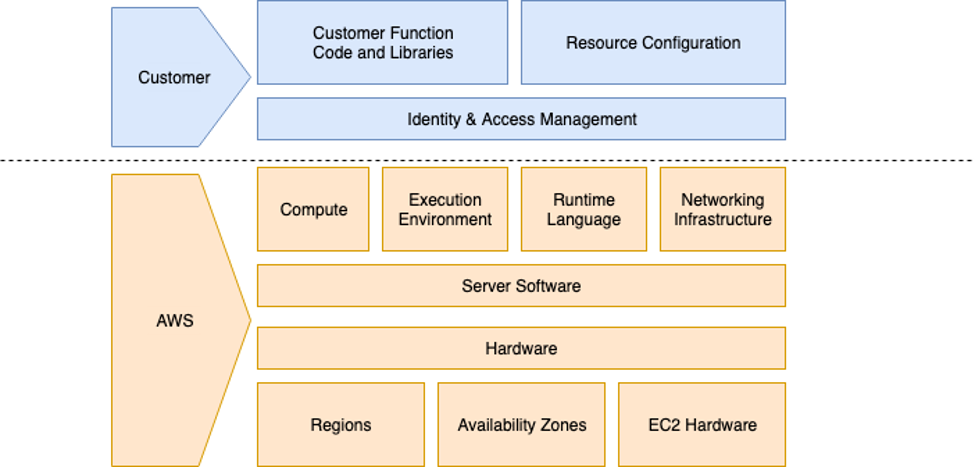

Security “in” the cloud — The Customer’s Responsibilities

Moving on to the top half of the shared responsibility model, we’ll focus on the Customer’s responsibilities: Resource Configuration, Customer Function Code and Libraries, and Identity & Access Management.

Resource Configuration:

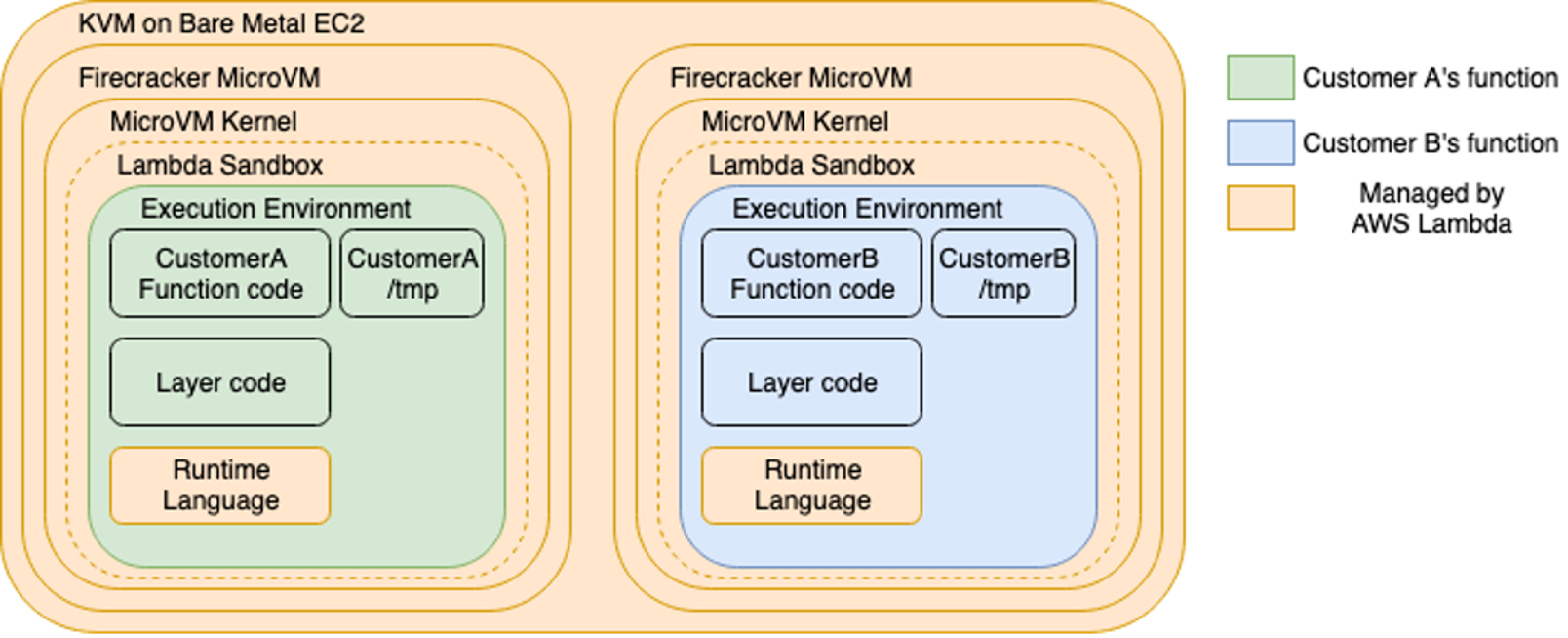

Beyond the configuration of the resources themselves (memory, timeout, and concurrency), another Lambda feature is the writable /tmp directory, 512 MB by default (configurable up to 10,240 MB). The same execution context, including the contents of /tmp, might be reused across invocations. This can reduce a function’s execution time, e.g. by downloading a machine learning model once and effectively caching it. However, the same behaviour has been used to chain vulnerabilities and gain persistence in an otherwise ephemeral environment. The Customer might need to restrict the conditions under which the contents of /tmp are reused.
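A minimal sketch of the caching pattern with a guard on reuse, assuming the artifact’s SHA-256 is pinned at deploy time and that `fetch` is a hypothetical stand-in for whatever download call the function makes (e.g. from S3). A cached copy in /tmp is reused only while it still matches the pinned hash, so a file planted or modified by an earlier invocation is discarded rather than trusted.

```python
import hashlib
import os
from typing import Callable

# Hypothetical cache location inside Lambda's writable /tmp directory.
DEFAULT_CACHE_DIR = "/tmp/model-cache"


def sha256_of_file(path: str) -> str:
    """Hash a file in 1 MiB chunks instead of reading it into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def load_cached_artifact(
    name: str,
    expected_sha256: str,
    fetch: Callable[[], bytes],
    cache_dir: str = DEFAULT_CACHE_DIR,
) -> bytes:
    """Return the artifact, reusing the cached copy only if it still matches the pinned hash."""
    os.makedirs(cache_dir, exist_ok=True)
    path = os.path.join(cache_dir, name)

    # Warm start: reuse the cached file only if its content is unchanged.
    if os.path.isfile(path) and sha256_of_file(path) == expected_sha256:
        with open(path, "rb") as f:
            return f.read()

    # Cold start, or the cached copy no longer matches: fetch and verify again.
    data = fetch()
    if hashlib.sha256(data).hexdigest() != expected_sha256:
        raise ValueError(f"{name}: fetched artifact failed integrity check")

    # Write under a temporary name, then atomically replace the cached copy.
    partial = path + ".partial"
    with open(partial, "wb") as f:
        f.write(data)
    os.replace(partial, path)
    return data
```

The pinned hash only protects the cached artifact itself. Per-invocation scratch data that should never outlive a request can go in a `tempfile.TemporaryDirectory()`, which is removed when its `with` block exits.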

Two more areas that we’ll include as part of resource configuration are environment variables and secrets. Although these topics overlap with IAM and function code, it’s worth noting that environment variables are part of Lambda’s function configuration: anyone who can call `get-function-configuration` on a function can typically read the contents of its environment variables. This is problematic if your secrets management pattern involves storing secrets in environment variables. You’ll most likely need to consider using AWS KMS or an alternative pattern altogether, such as retrieving secrets at runtime from AWS Secrets Manager.
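A quick way to spot this pattern is to scan a function’s configuration for variable names that look like secrets. The sketch below works on the JSON returned by `aws lambda get-function-configuration` (the same shape as boto3’s `get_function_configuration` response). The name patterns are our own hypothetical heuristics, so expect both misses and false positives.

```python
import re
from typing import Any, Dict, List

# Hypothetical naming heuristics; tune these to your organisation's conventions.
SECRET_NAME_PATTERN = re.compile(
    r"(PASSWORD|PASSWD|SECRET|TOKEN|API_?KEY|PRIVATE_?KEY|CREDENTIAL)", re.IGNORECASE
)


def secret_like_env_vars(function_config: Dict[str, Any]) -> List[str]:
    """Return environment variable names in a function configuration that look like secrets."""
    # Functions without environment variables have no "Environment" key at all.
    variables = function_config.get("Environment", {}).get("Variables", {})
    return sorted(name for name in variables if SECRET_NAME_PATTERN.search(name))
```

A name-based check only catches the obvious cases; a value copied into `LOG_LEVEL` would slip through, so treat it as a prompt for review rather than proof that a function is clean.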

Customer Function Code and Libraries:

The function code, its libraries and the choice of runtime (including any custom runtime) are the Customer’s responsibility. They largely fall under the following categories:

- Vulnerable libraries and code

- Lambda layers and their permissions

The Customer is responsible for the vulnerabilities and functionality of their own code, so secure software development processes apply: static code analysis, dependency scanning, code reviews, and so on. In addition, if Lambda layers are in use, they and their IAM permissions need to be fine-tuned. Lambda layers let you package libraries, a custom runtime, or other dependencies as a ZIP archive. You can then configure a function to use a layer without packaging those dependencies with every deployment. This is a great feature that allows code to be reused and increases the flexibility of Lambda. Layers also come with their own set of permissions: a layer version’s resource-based policy can grant other AWS accounts the right to use it, and IAM policies determine which identities (users, roles, groups) can publish new versions of it. For complex deployments, or to enforce a gatekeeper integrity control, you might want to consider code signing.
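As one small guardrail, a function’s configuration lists the layer version ARNs it uses under `Layers`, and the account ID in each ARN shows who owns the layer. The sketch below flags layers from accounts outside an allow-list. The account IDs are hypothetical, and this complements rather than replaces code signing, since it checks who published a layer, not what is inside it.

```python
from typing import Any, Dict, Iterable, List


def untrusted_layers(function_config: Dict[str, Any], trusted_accounts: Iterable[str]) -> List[str]:
    """Return layer version ARNs attached to a function that aren't owned by a trusted account."""
    trusted = set(trusted_accounts)
    flagged: List[str] = []
    for layer in function_config.get("Layers", []):
        arn = layer["Arn"]
        # Layer version ARN: arn:<partition>:lambda:<region>:<account-id>:layer:<name>:<version>
        parts = arn.split(":")
        if len(parts) != 8 or parts[2] != "lambda" or parts[5] != "layer":
            flagged.append(arn)  # unexpected format: flag it rather than trust it
        elif parts[4] not in trusted:
            flagged.append(arn)
    return flagged
```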

Identity & Access Management:

The Lambda Resource Policy

Since Lambdas can be triggered by different services, they also have their own resource policy. If you define a resource policy with a Principal of ‘*’ and no conditions, anyone who knows the function’s ARN can invoke it. If you’re not familiar with the AWS IAM model, you can think of a Lambda function as an S3 bucket that can execute code. The only IAM permission required to invoke a Lambda function is “lambda:InvokeFunction”. Lambda integrates with approximately 140 AWS services. This increases flexibility by allowing cross-account or even anonymous invocation; however, it also increases the attack surface.
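That check can be done mechanically. This sketch assumes the input is the response of `aws lambda get-policy` (or boto3’s `get_policy`), whose `Policy` field holds the resource policy as a JSON string, and flags Allow statements whose principal is `*` (or `{"AWS": "*"}`) with no `Condition`. A statement with a condition isn’t automatically safe; it simply falls outside this simple check.

```python
import json
from typing import Any, Dict, List


def _principal_is_wildcard(principal: Any) -> bool:
    """True for Principal "*" or {"AWS": "*"} (string or list form)."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS", [])
        aws = [aws] if isinstance(aws, str) else aws
        return "*" in aws
    return False


def public_invoke_statements(get_policy_response: Dict[str, Any]) -> List[str]:
    """Return Sids of Allow statements that let any principal act without a Condition."""
    policy = json.loads(get_policy_response["Policy"])  # the policy arrives as a JSON string
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    return [
        stmt.get("Sid", "<no Sid>")
        for stmt in statements
        if stmt.get("Effect") == "Allow"
        and _principal_is_wildcard(stmt.get("Principal"))
        and not stmt.get("Condition")
    ]
```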

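If an IAM identity within your account is allowed to modify a function’s resource policy (for example, with `lambda:AddPermission` when adding an integration), it can grant itself or another principal permission to invoke the Lambda. Make sure that you limit which identities can change a Lambda’s resource policy, and that you have visibility of which identities or AWS services can invoke your Lambdas.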
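One way to limit who can change a Lambda’s resource policy is an explicit Deny on `lambda:AddPermission` and `lambda:RemovePermission`, the actions that add and remove resource policy statements, for every principal except your deployment identity. The sketch below builds such a policy, e.g. for use as a Service Control Policy. The role name is a hypothetical placeholder, and you’d need to account for any other identities (such as a CloudFormation service role) that legitimately manage integrations.

```python
import json
from typing import Dict, List


def deny_resource_policy_changes(allowed_principal_arns: List[str]) -> Dict:
    """Build a policy denying Lambda resource-policy changes except by the listed principals."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyLambdaResourcePolicyChanges",
                "Effect": "Deny",
                # These actions add or remove statements in a function's resource policy.
                "Action": ["lambda:AddPermission", "lambda:RemovePermission"],
                "Resource": "*",
                # Any caller whose ARN matches none of these patterns is denied.
                "Condition": {"ArnNotLike": {"aws:PrincipalArn": allowed_principal_arns}},
            }
        ],
    }


# Hypothetical pipeline role; replace with your own deployment identity.
policy = deny_resource_policy_changes(["arn:aws:iam::*:role/lambda-deployment-role"])
print(json.dumps(policy, indent=2))
```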

The Lambda Execution Role

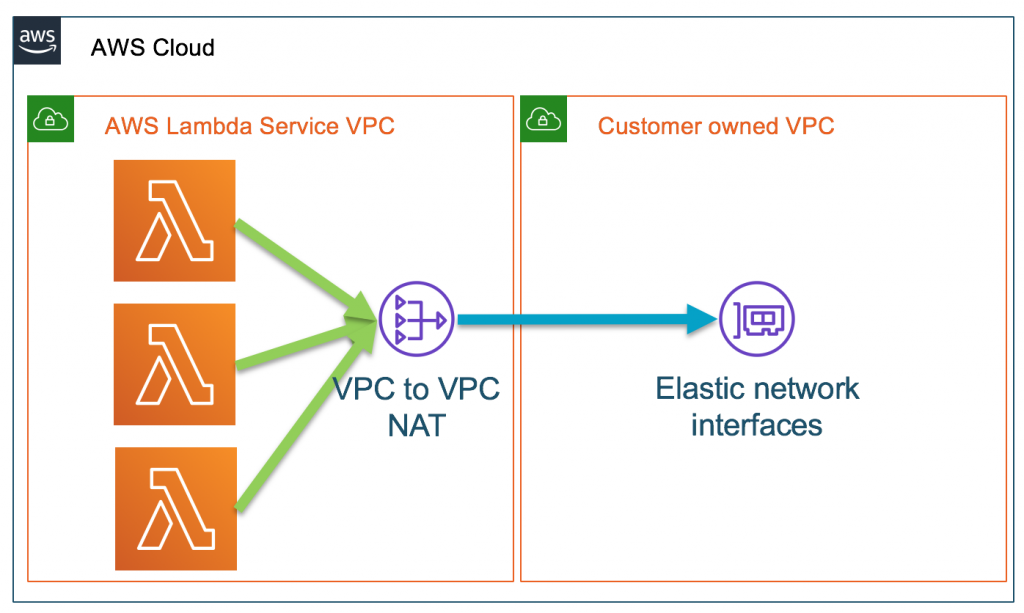

When a function is deployed inside a VPC, Lambda uses the function’s execution role to create ENIs in our VPC, which means the role needs permission to:

- Create and Delete ENIs

- Assign and Unassign private IP addresses

This is evident in the managed policy `AWSLambdaVPCAccessExecutionRole`, which provides the default minimum permissions. The Serverless framework also uses this policy by default when deploying inside a VPC. The network interfaces don’t exist until Lambda creates them, so these actions can’t easily be scoped to specific resources, which is why the managed policy appears quite permissive. However, you can limit deployments using permissions boundaries or IAM condition keys such as `lambda:SubnetIds`, which restrict functions to a specific set of subnets.
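As a sketch of the condition-key approach, assuming the `lambda:SubnetIds` key that Lambda documents for `CreateFunction` and `UpdateFunctionConfiguration` requests, the statement below denies deployments into any subnet outside an approved list. The subnet IDs are hypothetical, and `is_denied` is only a rough local model of the `ForAnyValue:StringNotEquals` operator to illustrate its behaviour, not a substitute for the IAM policy simulator.

```python
import json
from typing import Dict, List

# Actions that can set a function's VPC configuration.
VPC_CONFIG_ACTIONS = ["lambda:CreateFunction", "lambda:UpdateFunctionConfiguration"]


def restrict_to_subnets(allowed_subnet_ids: List[str]) -> Dict:
    """Deny creating or updating functions that use any subnet outside the approved list."""
    return {
        "Sid": "EnforceApprovedSubnets",
        "Effect": "Deny",
        "Action": VPC_CONFIG_ACTIONS,
        "Resource": "*",
        "Condition": {"ForAnyValue:StringNotEquals": {"lambda:SubnetIds": allowed_subnet_ids}},
    }


def is_denied(statement: Dict, requested_subnet_ids: List[str]) -> bool:
    """Rough local model of ForAnyValue:StringNotEquals for this statement.

    True if any requested subnet is unapproved. An empty request (no VPC config) matches
    nothing, so this statement on its own does not deny functions deployed outside a VPC.
    """
    allowed = statement["Condition"]["ForAnyValue:StringNotEquals"]["lambda:SubnetIds"]
    return any(subnet not in allowed for subnet in requested_subnet_ids)


# Hypothetical IDs standing in for your approved private subnets.
statement = restrict_to_subnets(["subnet-0123456789abcdef0", "subnet-0fedcba9876543210"])
print(json.dumps({"Version": "2012-10-17", "Statement": [statement]}, indent=2))
```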

Finally, treating Lambdas as a resource, we are able to trigger other Lambdas. A function or role that is able to invoke any function can be problematic, especially in a cross-account context. For example, the managed policy `AWSLambdaRole` grants `lambda:InvokeFunction` on all resources, so it allows you to trigger functions in other AWS accounts as well (provided, of course, that the target function’s resource policy allows it). This could be utilised as an exfiltration method, and the CloudTrail entry for that invocation will not even appear in your CloudTrail, as it is not your function that is being run.
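A sketch of the alternative to an `AWSLambdaRole`-style grant of `lambda:InvokeFunction` on `*`: allow invoke only on named functions in your own account, and flag any invoke grant whose resources aren’t pinned to that account. The account ID, region and function names are hypothetical, and the checker ignores `NotAction`, `NotResource` and conditions to stay short.

```python
from fnmatch import fnmatchcase
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """IAM allows either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def scoped_invoke_policy(account_id: str, region: str, function_names: List[str]) -> Dict:
    """Allow lambda:InvokeFunction only on the named functions in our own account."""
    resources: List[str] = []
    for name in function_names:
        base = f"arn:aws:lambda:{region}:{account_id}:function:{name}"
        resources += [base, f"{base}:*"]  # unqualified ARN plus versions and aliases
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "lambda:InvokeFunction", "Resource": resources}],
    }


def invoke_resources_outside_account(policy: Dict, account_id: str) -> List[str]:
    """Return Resource entries of invoke-granting Allow statements not pinned to account_id."""
    flagged: List[str] = []
    statements = policy["Statement"]
    if isinstance(statements, dict):
        statements = [statements]
    for stmt in statements:
        # IAM action names are case-insensitive; wildcards such as "lambda:*" also match.
        grants_invoke = any(
            fnmatchcase("lambda:invokefunction", action.lower())
            for action in _as_list(stmt.get("Action", []))
        )
        if stmt.get("Effect") != "Allow" or not grants_invoke:
            continue
        for resource in _as_list(stmt.get("Resource", [])):
            parts = resource.split(":")
            # arn:partition:lambda:region:account-id:function:name[:qualifier]
            if len(parts) < 5 or parts[4] != account_id:
                flagged.append(resource)
    return flagged
```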

Deployment

The permissions a deployment role needs depend on the AWS services that the application uses. If you’re deploying using CloudFormation, you can query the CloudTrail events generated by your already deployed stack as a starting point.
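As a rough sketch of that starting point, assuming you already have the CloudTrail records for the stack’s deployment (for example the parsed `CloudTrailEvent` field of each entry returned by `aws cloudtrail lookup-events`), each record’s `eventSource` and `eventName` can be turned into a candidate IAM action. The mapping is approximate: service prefixes don’t always match the event source, and permission-only actions such as `iam:PassRole` never appear as events, so review the result before using it.

```python
import re
from typing import Dict, Iterable, List

# Lambda appends an API version to some event names, e.g. "CreateFunction20150331".
_VERSION_SUFFIX = re.compile(r"\d{8}(v\d+)?$")


def candidate_actions(cloudtrail_records: Iterable[Dict]) -> List[str]:
    """Approximate the IAM actions a deployment used, as input for a least-privilege role."""
    actions = set()
    for record in cloudtrail_records:
        prefix = record["eventSource"].split(".")[0]  # "lambda.amazonaws.com" -> "lambda"
        name = _VERSION_SUFFIX.sub("", record["eventName"])
        actions.add(f"{prefix}:{name}")
    return sorted(actions)
```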

Going back to our VPC requirement, we also need to ensure that Lambda deployments are limited to specific VPCs and their respective resources. This way we can enforce the environments in which Lambda functions can be deployed. This is achieved with a Service Control Policy and/or an IAM permissions boundary.
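A sketch of such an SCP, modelled on the VPC condition-key examples in the Lambda documentation: the first statement denies functions with no VPC configuration at all, and the second denies any VPC outside an approved list. Both are needed, because `ForAnyValue:StringNotEquals` doesn’t match a request that carries no VPC IDs. The VPC ID is hypothetical, and the same statements could be placed in a permissions boundary instead.

```python
import json
from typing import Dict, List

# Actions that can set a function's VPC configuration.
VPC_CONFIG_ACTIONS = ["lambda:CreateFunction", "lambda:UpdateFunctionConfiguration"]


def enforce_vpc_scp(allowed_vpc_ids: List[str]) -> Dict:
    """SCP denying functions outside a VPC or attached to an unapproved VPC."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyFunctionsOutsideVpc",
                "Effect": "Deny",
                "Action": VPC_CONFIG_ACTIONS,
                "Resource": "*",
                # Null == "true": the request carries no VPC IDs at all.
                "Condition": {"Null": {"lambda:VpcIds": "true"}},
            },
            {
                "Sid": "DenyUnapprovedVpcs",
                "Effect": "Deny",
                "Action": VPC_CONFIG_ACTIONS,
                "Resource": "*",
                # Denied if any requested VPC is not in the approved list.
                "Condition": {"ForAnyValue:StringNotEquals": {"lambda:VpcIds": allowed_vpc_ids}},
            },
        ],
    }


# Hypothetical ID standing in for your approved workload VPC.
scp = enforce_vpc_scp(["vpc-0123456789abcdef0"])
print(json.dumps(scp, indent=2))
```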

We’ve built a solution to showcase what this can look like, which you can also use as a starting point when building your own secure, reusable pattern. Take a look at our GitHub repo. We’re using the Serverless framework to manage the deployment of the infrastructure and the packaging of the application.

The infrastructure is defined in CloudFormation but deployed using the Serverless framework. This way we separate the infrastructure from the application code, whilst keeping everything in one place. We can then reuse infrastructure components, e.g. subnets, to reference where our Lambdas will be deployed.

Conclusion

We’ve talked about the core areas of the shared responsibility model and what you’ll need to consider when securing and operating workloads using AWS Lambda. You might think that deploying a Lambda inside a VPC means missing out on its flexibility; however, once you establish your application’s trust boundaries and the services it depends on, you can build reusable foundations.

The Good: We love the flexibility of Lambda, IAM conditions can limit you to launching functions in a VPC, and SAM and the Serverless framework make Lambda development a breeze.

The Bad: You will most likely need to provide a secure network boundary that includes VPC endpoint policies to limit which resources can be accessed.

The Ugly: There is groundwork that needs to be done before developing Lambdas in a VPC, and the number of components that need to be put in place might make for a steep learning curve. We’ll cover VPC endpoints and VPC endpoint services in the next article.

Although you might need to invest in building the foundations when deploying Lambdas to a VPC, you can reuse and extend that infrastructure once you’re comfortable with how your workload operates, giving your entire infrastructure a greater level of security. The shared responsibility model is yet another way of framing most security, compliance, and operational conversations within an organisation.